Last week I was struck by the headline:

GOOGLE AND OXFORD SCIENTISTS PUBLISH PAPER CLAIMING AI WILL “LIKELY” ANNIHILATE HUMANKIND “AN EXISTENTIAL CATASTROPHE IS NOT JUST POSSIBLE, BUT LIKELY.” https://futurism.com/the-byte/google-oxford-paper-ai-humankind. Articles about doomsday AI scenarios now appear almost daily.

Initially, I just shared the story through my LinkedIn and Facebook channels. Then my friend, Theo Joubert, asked me what I thought about it. I responded:

I think AI is a huge danger. It’s not as acute as our politics or as inevitable as climate change, but AI, even in its current infancy, is already profoundly dangerous to humanity. That said, I am far less concerned with the future emergence of “Terminator” or “Big Brother” AI sentience, and far more concerned with the kinds of “super weapons” AI is already providing predatory people and corporations.

I’ve been concerned with this since the early ’80s. Back then I realized that robotic machines like the ones I was in the business of building (for making PC parts) would lead to radically more sophisticated forms of automation. I saw the fearful faces of factory workers as they watched our crews assemble the machines that would replace them. Later, from inside Apple, I watched as secretarial and office admin jobs were taken over by software programs. It’s long been clear that the upward spiral of cheap, pervasive computing power will lead to replacing human jobs with machines everywhere it makes financial sense to do so. Money does what serves money.

My point is that most people fear AI becoming a sentient, autonomous agency. That may very well happen, but I am more concerned with what AI is already doing right now. Not as a separate thing or entity, but rather as a super weapon for predatory capitalism (manipulating buying behaviors) and predatory politics (manipulating voting behaviors).

In the wake of 9/11, the SF Airport was considering installing a new kind of face recognition-based security system. To get ahead of the inevitable privacy vs. security controversy, a lawyer for the company selling the new system began lobbying a California senator, who in turn asked me to weigh in. We three began our discussion with concerns that the government, more specifically the FBI, could abuse it. My concerns differed. I was, and remain, more concerned with predators learning to use AI to become better predators.

The problem isn’t AI. It’s our near-universal acceptance of predatory economic and political behaviors. It’s using AI as a superweapon for predatory manipulation (more than AI being the predator) that concerns me.

“There is no greater injustice than to wring your profits from the sweat of another man’s brow.”

These are related pieces:

The corporate behaviors that outrage us are consequences of an underlying ethic we take for granted: https://bit.ly/3RWoCsc

The Three Codes: https://bit.ly/3Dvvp7P

The Challenge of Change: https://bit.ly/3DDxUF4

Robots are Coming: https://bit.ly/3BgLbki

My friend replied: How do we learn to deal with these threats? You mention the threat of predators learning to use AI to become better predators.

As you know, there are no easy answers or simple responses. As a starting place, I think it’s helpful to think about this on two fronts.

One is about preparing human beings for a future in which AI will out-compete humans for many, if not most, of today’s jobs. What will most help humans survive and thrive in a future in which the machines displace humans from ever more physical and mental labor jobs, and also provide ever better on-demand personalized learning support? In my view, nothing will be more relevant to a person’s success in the future than how well they can learn when they get there. It might be a kind of learning the machines can’t do. It might be a kind of learning that adds value to the machines. It might be a kind of learning through which people adapt to living in a world completely beyond our current imagining. One thing is clear, though: the way we educate today is still based on the assumption that what’s being taught is more important than how well learners are learning to be better autonomous learners. In that sense, it’s a gross disservice.

So, one front is transforming our educational system so instead of debating “what to teach them”, we are learning together to steward their “autonomous learning agency”.

The other front is political: it’s about building an awareness campaign / political movement that leads to outlawing predatory capitalism (like we outlawed its ancestor, slavery).

Slavery was a form of predatory capitalism. It reduced humans to ownable objects that could be used in any way their owners wanted. The US Civil War outlawed human-property ownership, but not human-asset predation. Today’s predatory capitalism is a subtler form of slavery. It treats human beings as objects of financial value – assets – that can be manipulated into behaviors that generate profit. Armed with AI, this ethic is what most endangers us. It’s going to take a new kind of government – a new kind of capitalism – to rid us of the predators. https://www.implicity.org/ethics/ethics17.htm

See also:

|

Elon Musk: It’s Going to Hit Like an Asteroid |

| What if the Slavers had Nukes? |  |

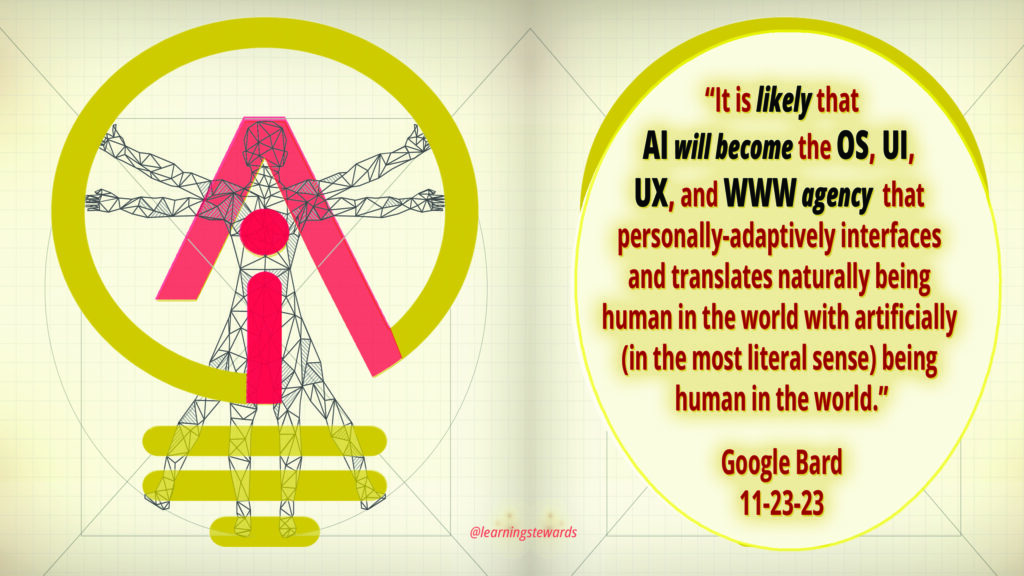

| AI: The Future of Human Interface |

|

| Preventing AI Enhanced Predation |

|
